For macroeconomists

I finally got round to reading this paper by Iván Werning, “Managing a Liquidity Trap: Monetary and Fiscal Policy”. It takes the canonical New Keynesian model, puts it into continuous time, and looks at optimal monetary and fiscal policy when there is a liquidity trap. (To be precise: a period where real interest rates are above their natural level because nominal interest rates cannot be negative.) I would say it clarifies rather than overturns what we already know, but I found some of the clarifications rather interesting. Here are just two.

1) Monetary policy alone. The optimal commitment (Krugman/Eggertsson and Woodford) [1] policy of creating a boom after the liquidity trap period might (or might not) generate a path for inflation where inflation is always above target (taken as zero). Here is a picture from the paper, where the output gap is on the vertical axis and inflation on the horizontal, and we plot the economy through time. The black dots show the economy under optimal discretionary policy and the blue dots the economy under commitment; in both cases the economy ends up at the bliss point of a zero output gap and zero inflation.

In this experiment real interest rates are above their natural level (i.e. the liquidity trap lasts) for T periods, and everything after this shock is known. Under discretionary policy, both output and inflation are too low for as long as the liquidity trap lasts. In this case output starts off 11% below its natural level, and inflation about 5% below. The optimal commitment policy creates a positive output gap after the liquidity trap period (after T). Inflation in the NK Phillips curve is just the (discounted) integral of future output gaps, so inflation could be positive immediately after the shock: here it happens to be zero. As we move forward in time some of the negative output gaps disappear from the integral, and so inflation rises.
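To see how inflation can start at zero and then rise, here is a minimal numerical sketch. It assumes the standard continuous-time NK Phillips curve in forward-integrated form, π(t) = κ∫ e^{-ρ(s-t)} x(s) ds taken from t onwards, and a hypothetical, stylised output-gap path: a constant gap of −a until the trap ends, then a constant boom of +b until a later date, then zero. The parameter values and function names are made up for illustration and are not Werning's calibration.

```python
import math

# Hypothetical parameters, chosen only for illustration
KAPPA = 0.5   # slope of the Phillips curve
RHO = 0.05    # discount rate


def discounted_gap_integral(t, a, b, trap_end, boom_end, rho=RHO):
    """Integral from t to infinity of e^{-rho (s - t)} x(s) ds for the stylised
    path x(s) = -a on [0, trap_end), +b on [trap_end, boom_end), 0 afterwards."""

    def segment(lo, hi, level):
        lo = max(lo, t)  # only gaps from t onwards enter the integral
        if hi <= lo:
            return 0.0
        return level * (math.exp(-rho * (lo - t)) - math.exp(-rho * (hi - t))) / rho

    return segment(0.0, trap_end, -a) + segment(trap_end, boom_end, b)


def inflation(t, a, b, trap_end, boom_end, kappa=KAPPA, rho=RHO):
    """Forward-integrated NK Phillips curve: pi(t) = kappa * discounted gaps."""
    return kappa * discounted_gap_integral(t, a, b, trap_end, boom_end, rho)


def boom_for_zero_initial_inflation(a, trap_end, boom_end, rho=RHO):
    """Size of the post-trap boom b that makes pi(0) exactly zero."""
    slump = a * (1.0 - math.exp(-rho * trap_end))
    window = math.exp(-rho * trap_end) - math.exp(-rho * boom_end)
    return slump / window


a, trap_end, boom_end = 0.05, 2.0, 4.0
b = boom_for_zero_initial_inflation(a, trap_end, boom_end)
for t in (0.0, 1.0, 2.0, 3.0, 4.0):
    print(f"t={t:.1f}  pi={inflation(t, a, b, trap_end, boom_end):+.5f}")
```

With these made-up numbers inflation is zero at t = 0, rises while the negative gaps drop out of the integral, then falls back to zero as the boom ends, so it is never below target. A smaller boom would make initial inflation negative; a larger one would make it positive.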

It makes sense, as Werning suggests, to focus on the output gap. Think of the causality involved, which runs: real rates → output gap (with forward integration) → inflation (with forward integration), which then feeds back on to real rates. Optimal policy must involve an initial negative output gap for sure, followed by a positive output gap, but inflation need not be negative at any point.
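For reference, that causal chain can be written out with the two equations of the standard continuous-time New Keynesian model. The notation is assumed here rather than copied from the paper: x is the output gap, π inflation, i the nominal rate, r^n the natural real rate, σ the intertemporal elasticity of substitution, κ the Phillips-curve slope and ρ the discount rate. Integrating each equation forward, with gaps eventually returning to zero, gives the two "forward integration" steps.

```latex
\dot x(t) = \sigma \bigl( i(t) - \pi(t) - r^n(t) \bigr), \qquad
\dot \pi(t) = \rho\, \pi(t) - \kappa\, x(t)

x(t) = -\sigma \int_t^{\infty} \bigl( i(s) - \pi(s) - r^n(s) \bigr)\, ds

\pi(t) = \kappa \int_t^{\infty} e^{-\rho (s - t)}\, x(s)\, ds
```

The real-rate gap i − π − r^n is what the zero bound keeps positive during the trap; the output gap is its forward integral, and inflation is the discounted forward integral of the output gap.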

There are other consequences. The optimal commitment policy involves creating a positive output gap in the future, which implies keeping real interest rates below their natural level for a period after T. But because inflation is also higher then, nominal rates could be higher too. As a result, at any point in time the nominal rate on a sufficiently long-term bond could also be higher (page 16).
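The step from real to nominal rates is just the Fisher relation. In notation assumed here (r the real rate, r^n its natural level, π inflation, i the nominal rate), discretion returns the economy to zero inflation and a zero gap after T, so i = r^n then. Commitment pushes r below r^n after T, but the nominal rate is still higher whenever inflation more than offsets that cut. For the long bond, a simplifying assumption (the pure expectations hypothesis, ignoring term premia) is that its yield over maturity H is the average expected short rate, so higher expected short rates after T can raise it.

```latex
i(t) = r(t) + \pi(t) = r^n(t) + \bigl[ r(t) - r^n(t) \bigr] + \pi(t)

i(t) > r^n(t) \iff \pi(t) > r^n(t) - r(t) \qquad (t > T)

R_H(t) = \frac{1}{H} \int_t^{t+H} i(s)\, ds
```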

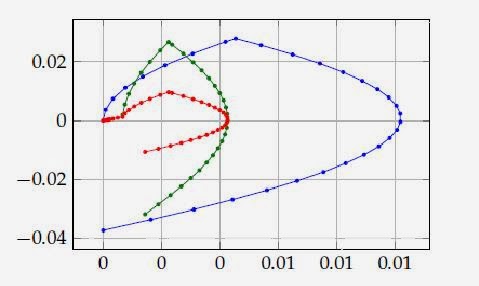

2) Adding fiscal policy. The paper considers adding government spending as a fiscal instrument. It makes an interesting distinction between ‘opportunistic’ and ‘stimulus’ changes in government spending, but I do not think I need that for what follows, so I hope to come back to it in a later post. What I had not taken on board is that the optimal path for government spending might involve a prolonged period where government spending is below its natural level. Here is another picture from the paper.

The blue line is the optimal commitment policy without any fiscal action: the same pattern as in the previous figure. The red line is the path for output and inflation with optimal government spending, and the green line is the path for the consumption gap, rather than the output gap, in that second case. The vertical difference between red and green is what is happening to government spending.

The first point is that using fiscal policy leads to a distinct improvement. We need much less excess inflation, and the output gap is always smaller. The second is that although government spending is initially above its natural level, it falls below it when the output gap is itself positive, i.e. after T. Why is this?

Our initial intuition might be that government spending should just ‘plug the gap’ generated by the liquidity trap, giving us a zero output gap throughout. Then there would be no need for an expansionary monetary policy after the trap: fiscal policy could completely stabilise the economy during the liquidity trap period. This would give us declining government spending, because the gap itself declines. (Even if the real interest rate is too high by a constant amount during the liquidity trap, consumption cumulates this forward, so the consumption shortfall is largest at the start and shrinks to zero at T.)
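The bracketed point can be checked with a minimal sketch. It assumes a consumption Euler equation in gap form, ċ(t) = σ(r(t) − r^n(t)), a real-rate gap that is a constant amount before T and zero afterwards, and a consumption gap that is zero from T on. It also assumes, as a linearised simplification, that the output gap is the consumption gap plus the government spending gap, so ‘plugging the gap’ means setting spending equal to minus the consumption gap. The parameter values and function names are hypothetical.

```python
# Hypothetical parameters (assumptions for illustration only)
SIGMA = 1.0      # intertemporal elasticity of substitution
RATE_GAP = 0.02  # constant excess of the real rate over its natural level in the trap
TRAP_END = 2.0   # T, the length of the liquidity trap


def consumption_gap(t, sigma=SIGMA, rate_gap=RATE_GAP, trap_end=TRAP_END):
    """c(t) = -sigma * integral_t^inf (r(s) - r^n(s)) ds, where r - r^n equals
    rate_gap before trap_end and 0 afterwards: the remaining trap is cumulated."""
    remaining_trap = max(trap_end - t, 0.0)
    return -sigma * rate_gap * remaining_trap


def plug_the_gap_spending(t, **kwargs):
    """Spending gap needed for a zero output gap, assuming
    output gap = consumption gap + spending gap (linearised simplification)."""
    return -consumption_gap(t, **kwargs)


for t in (0.0, 0.5, 1.0, 1.5, 2.0):
    print(f"t={t:.1f}  c={consumption_gap(t):+.3f}  g={plug_the_gap_spending(t):+.3f}")
```

Because each date's consumption gap sums the excess real rate over the rest of the trap, the shortfall shrinks linearly to zero at T, and so does the spending needed to offset it.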

This intuition is not correct, partly because using the government spending instrument has costs: we move away from the optimal allocation of public goods. So fiscal policy does not dominate (eliminate the need for) the Krugman/Eggertsson and Woodford monetary policy, and optimal policy will involve a mixture of the two. That in turn means we will still get, under an optimal commitment policy, a period after the liquidity trap when there is a positive consumption gap.

The benefit of the positive consumption gap after the liquidity trap, and the associated lower real rate, is that it raises consumption in the liquidity trap period compared with what it would otherwise have been. The cost is higher inflation in the post-liquidity-trap period. But inflation depends on the output gap, not just the consumption gap. So we can improve the trade-off by lowering government spending in the post-liquidity-trap period.
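A stylised sketch of that last step, under loudly simplifying assumptions: the output gap is the consumption gap plus the government spending gap (a linearised approximation), inflation follows the forward-integrated Phillips curve π(t) = κ∫ e^{-ρ(s-t)} x(s) ds, and the post-trap consumption gap is a hypothetical constant between T and a later date. Holding that consumption path fixed, cutting spending over the same window lowers the output gap there, and so lowers inflation at every date up to the end of the window. All names and numbers are illustrative assumptions.

```python
import math

# Hypothetical parameters (assumptions, not the paper's calibration)
KAPPA = 0.5   # Phillips-curve slope
RHO = 0.05    # discount rate


def inflation_from_gap_window(t, level, start, end, kappa=KAPPA, rho=RHO):
    """pi(t) = kappa * integral_t^inf e^{-rho (s - t)} x(s) ds for an output gap
    x(s) = level on [start, end) and 0 elsewhere."""
    lo = max(start, t)
    if end <= lo:
        return 0.0
    return kappa * level * (math.exp(-rho * (lo - t)) - math.exp(-rho * (end - t))) / rho


def post_trap_inflation(t, cons_gap, spending_gap, trap_end, boom_end):
    """Output gap on [trap_end, boom_end) = consumption gap + spending gap
    (linearised assumption); the consumption path itself is held fixed."""
    return inflation_from_gap_window(t, cons_gap + spending_gap, trap_end, boom_end)


trap_end, boom_end, cons_gap = 2.0, 4.0, 0.03
for spending_gap in (0.0, -0.01):
    pi_T = post_trap_inflation(trap_end, cons_gap, spending_gap, trap_end, boom_end)
    print(f"spending gap {spending_gap:+.2f}: inflation at T = {pi_T:.4f}")
```

The sketch deliberately leaves out the cost side, the welfare loss from moving public spending away from its optimal level; that cost is presumably what stops the optimum from simply offsetting the post-trap consumption gap one-for-one.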

Two final points on what the paper reaffirms. First, even with the most optimistic (commitment) monetary policy, fiscal policy has an important role in a liquidity trap. Those who still believe that monetary activism is all you need in a liquidity trap must be using a different framework. Second, the gains from trying to implement something like the commitment policy are large. Yet everywhere monetary policy seems to be following the discretionary rather than the commitment policy: there is no discussion of allowing the output gap to become positive once the liquidity trap is over, and rules that might mimic the commitment policy are off the table. [2] I wonder whether macroeconomists in 20 years' time will look back on this period with the same bewilderment with which we now look back on monetary policy in the early 1930s or 1970s.

[1] Krugman, Paul. 1998. “It’s Baaack! Japan’s Slump and the Return of the Liquidity Trap.” Brookings Papers on Economic Activity, 1998(2): 137–87. Eggertsson, Gauti B., and Michael Woodford. 2003. “The Zero Bound on Interest Rates and Optimal Monetary Policy.” Brookings Papers on Economic Activity, 34(1): 139–235.

[2] Allowing inflation to rise a little above target while the output gap is still negative is quite consistent with following a discretionary policy. I think some people believe that monetary policy in the US might be secretly intending to follow the Krugman/Eggertsson and Woodford strategy, but as the whole point of this strategy is to influence expectations, keeping it secret would be worse than pointless.